I have been messing around with my monitors and portrait mode, and I want to try out this setup for a while to see how it goes. Manually creating a dual-monitor wallpaper for Windows 7 on this particular setup is fairly tricky, so I’m going to share how to do it with Gimp.

I’m going to walk through a rather difficult case here: having screen space to the upper left of the main monitor, and making a single image span both monitors while taking the monitors’ real-world positions into account. If you just want different wallpapers on two normally aligned monitors, it is much simpler.

Specialized software to do this

If you don’t want to go through all this trouble, there is software out there that does most of the work for you. An open-source option is Dual Wallpaper from the Dual Monitor Tools package, which can be found on SourceForge: http://dualmonitortool.sourceforge.net/dualwallpaper.html

Also, according to AddictiveTips, Windows 8 will have at least some support for wallpapers on multiple monitors.

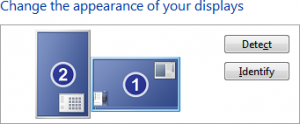

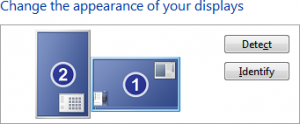

Telling Windows the screens’ relative positions

Before starting, make sure that Windows knows how the two monitors are positioned relative to each other, as this affects how the wallpaper has to be made. You can do this by right-clicking on your desktop and clicking “Screen resolution”.

Notice that you can drag the monitor icons to match how the screens are positioned in real life. This affects how your mouse, windows and wallpaper wrap over to the other monitor, so make sure it is correct if you have differently sized monitors.

If your monitor’s stand lets you adjust its height, it is easier to make a rough positioning in Windows and then adjust the monitor height to align it precisely.

Understanding Windows 7 wallpaper positioning

There are five modes: Fill, Fit, Stretch, Center and Tile. The first four use the same wallpaper on both monitors, which makes them unusable for this. The Tile option, however, starts at the main monitor’s upper-left corner and repeats from that point onto the other monitors while respecting their relative positions, as explained in the previous step.

That means that if you have two 1280×1024 px monitors side by side showing a 2560×1024 px wallpaper, the main monitor will show the area from 0×0 to 1279×1023 px and the secondary monitor the area from 1280×0 to 2559×1023 px.
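The Tile arithmetic can be checked with a small sketch. It assumes, based on the behaviour described above, that Tile mode shows wallpaper pixel (x mod W, y mod H) at a desktop pixel (x, y) measured from the main monitor’s upper-left corner, where W×H is the wallpaper size. The function names are made up for illustration.

```python
def wallpaper_pixel(x, y, wall_w, wall_h):
    """Wallpaper pixel shown at desktop pixel (x, y) in Tile mode.

    (x, y) is measured from the main monitor's upper-left corner; negative
    values lie to the left of / above it. Python's % never returns a
    negative number, which gives the wrap-around behaviour.
    """
    return x % wall_w, y % wall_h


def visible_region(mon_x, mon_y, mon_w, mon_h, wall_w, wall_h):
    """Inclusive (left, top, right, bottom) wallpaper area a monitor shows.

    Assumes the area does not wrap past the wallpaper's right or bottom edge.
    """
    left, top = wallpaper_pixel(mon_x, mon_y, wall_w, wall_h)
    return left, top, left + mon_w - 1, top + mon_h - 1


# Two 1280x1024 monitors and a 2560x1024 wallpaper, as in the example above.
print(visible_region(0, 0, 1280, 1024, 2560, 1024))      # (0, 0, 1279, 1023)
print(visible_region(1280, 0, 1280, 1024, 2560, 1024))   # (1280, 0, 2559, 1023)
print(visible_region(-1280, 0, 1280, 1024, 2560, 1024))  # (1280, 0, 2559, 1023)
```

The last call places the secondary monitor to the left of the main one, yet it gets the same area as a monitor on the right.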

However, if the secondary monitor is to the left of the main monitor, it will still display the right side of the wallpaper! This is because the left monitor shows the tile to the left of the main monitor, as illustrated below with my monitor setup:

So when you create your wallpaper, you first have to make sure that its resolution is large enough that no two monitors show the same area. Secondly, you have to work out which areas will show up on which monitor.
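Whether two monitors end up showing the same area can be checked before drawing anything. The sketch below assumes the same wrap-around mapping as in Tile mode, (x mod W, y mod H), and monitor rectangles given as (x, y, width, height) relative to the main monitor’s upper-left corner. Because that mapping treats x and y independently, two monitors share wallpaper pixels exactly when their wrapped x ranges overlap and their wrapped y ranges overlap. The monitor names and sizes are hypothetical.

```python
from itertools import combinations


def residue_intervals(start, length, period):
    """Half-open intervals covered by start .. start+length-1 taken modulo period."""
    if length >= period:
        return [(0, period)]  # covers every column (or row) of the wallpaper
    s = start % period
    if s + length <= period:
        return [(s, s + length)]
    return [(s, period), (0, s + length - period)]  # wraps past the edge


def intervals_overlap(a, b):
    """True if any half-open interval in a intersects any interval in b."""
    return any(max(lo1, lo2) < min(hi1, hi2) for lo1, hi1 in a for lo2, hi2 in b)


def shared_areas(monitors, wall_w, wall_h):
    """Pairs of monitor names that would show the same wallpaper pixels."""
    clashes = []
    for (n1, (x1, y1, w1, h1)), (n2, (x2, y2, w2, h2)) in combinations(monitors.items(), 2):
        same_x = intervals_overlap(residue_intervals(x1, w1, wall_w),
                                   residue_intervals(x2, w2, wall_w))
        same_y = intervals_overlap(residue_intervals(y1, h1, wall_h),
                                   residue_intervals(y2, h2, wall_h))
        if same_x and same_y:
            clashes.append((n1, n2))
    return clashes


monitors = {"main": (0, 0, 1280, 1024), "left": (-1280, 0, 1280, 1024)}
print(shared_areas(monitors, 1280, 1024))  # [('main', 'left')]
print(shared_areas(monitors, 2560, 1024))  # []
```

A wallpaper as large as the bounding box around all monitors, which is the size of a full-desktop screenshot, always passes this check.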

Creating a monitor mask

With a more complicated screen setup like the one shown above, it is useful to create a mask showing, with pixel accuracy, which areas will be shown on the screens and how they are positioned.

First, press Print Screen to take a screenshot. Then press Ctrl+Shift+V in Gimp to create a new image containing the screenshot. You should now have something like this:

Notice how the areas not covered by your monitors are pitch black. Next, make sure that the image has an alpha channel by right-clicking on the layer and clicking “Add Alpha Channel”. (If it is disabled, the layer already has one.)

Now we want to remove everything except the black areas. To do this, use the Fuzzy Select Tool to select all the black areas. To select more than one area, simply click while holding Shift to add another area to the existing selection.

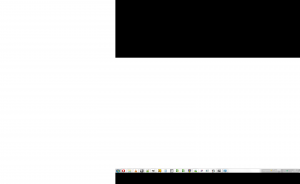

When done, invert the selection with “Select->Invert” (or Ctrl+I) so that everything except the black areas is selected. Then press Del to delete everything in the selection, which should now become transparent like this:

Taking physical position into account

All normal monitors have a bezel around the screen, which makes a small gap between the monitors unavoidable. This makes everything jump a small distance whenever something crosses from one screen to another. This isn’t very noticeable when it moves horizontally, but diagonally it is a different story, as you can see in the following photo:

To get the best results, you should take this gap into account when creating your wallpaper. So find your ruler and start measuring!

Once you have measured the distance between the monitors, it has to be converted into pixels. Since I wasn’t completely sure what my monitor’s PPI was, I took the low-tech approach and created a 10 cm wide image:

Using my ruler, I measured the width of the image on the screen. When it didn’t match, I adjusted the PPI in the advanced settings a bit and created a new image, repeating this until I found the correct PPI. With that PPI setting, I then created a new image as wide as the gap I had measured and read off its width in pixels, using Gimp as a unit converter. (Interestingly enough, it ended up being 94 PPI.)
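As an alternative to trial and error, the real PPI can be estimated from a single measurement. This assumes the test image is shown with one image pixel per screen pixel, e.g. at 100% zoom with Gimp’s View->Dot for Dot enabled. A test image made intended_cm wide at assumed_ppi has intended_cm / 2.54 × assumed_ppi pixels, so on a screen with real PPI R it measures intended_cm × assumed_ppi / R. The measurements below are hypothetical.

```python
CM_PER_INCH = 2.54


def calibrated_ppi(assumed_ppi, intended_cm, measured_cm):
    """Estimate the screen's real PPI from one ruler measurement.

    The test image was created intended_cm wide at assumed_ppi and is shown
    1:1 on screen; measured_cm is its width according to the ruler.
    """
    return assumed_ppi * intended_cm / measured_cm


def cm_to_px(cm, ppi):
    """Convert a physical distance on the screen to whole pixels."""
    return round(cm / CM_PER_INCH * ppi)


# Hypothetical: a 10 cm test image created at 100 PPI measures 12.5 cm on screen.
print(calibrated_ppi(100, 10, 12.5))  # 80.0
# Hypothetical 3 cm gap between the screens at 94 PPI.
print(cm_to_px(3.0, 94))  # 111
```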

To finish your monitor mask, add this new information to it:

You only need to make this mask once, so save it somewhere safe and reuse it the next time you want a new wallpaper.
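As a cross-check of the mask, its layout can also be computed from the monitor rectangles. The sketch assumes that the Print Screen capture covers the bounding box around all monitors (as the pitch-black areas suggest) and that each monitor is given as (x, y, width, height) relative to the main monitor’s upper-left corner, as arranged in “Screen resolution”. The tiny sizes are hypothetical so the printed mask stays readable. The main_x and main_y values tell you where the main monitor’s corner sits in the screenshot.

```python
def screenshot_layout(monitors):
    """Size of a full-desktop capture and where the main monitor sits in it.

    monitors maps a name to (x, y, width, height) relative to the main
    monitor's upper-left corner. Returns (width, height, main_x, main_y).
    """
    left = min(x for x, _, _, _ in monitors.values())
    top = min(y for _, y, _, _ in monitors.values())
    right = max(x + w for x, _, w, _ in monitors.values())
    bottom = max(y + h for _, y, _, h in monitors.values())
    return right - left, bottom - top, -left, -top


def uncovered_mask(monitors):
    """Rows of booleans: True where no monitor covers the pixel (the black areas)."""
    width, height, main_x, main_y = screenshot_layout(monitors)
    mask = [[True] * width for _ in range(height)]
    for x, y, w, h in monitors.values():
        for row in range(y + main_y, y + main_y + h):
            for col in range(x + main_x, x + main_x + w):
                mask[row][col] = False
    return mask


monitors = {"main": (0, 0, 4, 3), "left": (-2, -1, 2, 4)}
print(screenshot_layout(monitors))  # (6, 4, 2, 1)
for row in uncovered_mask(monitors):
    print("".join("#" if black else "." for black in row))
# ..####
# ......
# ......
# ......
```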

Creating a wallpaper

Now the fun finally starts. Find an image, resize and crop it so it has the same dimensions as your monitor mask, and place the mask on top of it like this:

The next few (and last!) steps create the wallpaper image for Windows from this image. As mentioned before, different parts of the image will show up on different screens, so this is where the monitor mask comes in handy.
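The resize-and-crop step at the start of this section is simple arithmetic: scale by whichever factor makes the image cover the mask in both directions, then crop away the excess. The sketch assumes a centred crop, while in practice you may prefer to pick the crop position by eye. The photo and mask sizes are hypothetical.

```python
import math


def cover_and_crop(img_w, img_h, target_w, target_h):
    """Scale an image so it just covers target_w x target_h, then crop the centre.

    Returns (new_w, new_h, crop_box), where crop_box is (left, top, right, bottom)
    in the scaled image.
    """
    scale = max(target_w / img_w, target_h / img_h)
    # ceil() plus max() guard against floating-point results just below the target.
    new_w = max(target_w, math.ceil(img_w * scale))
    new_h = max(target_h, math.ceil(img_h * scale))
    left = (new_w - target_w) // 2
    top = (new_h - target_h) // 2
    return new_w, new_h, (left, top, left + target_w, top + target_h)


# Hypothetical: a 4000x3000 photo and a 3000x1920 monitor mask.
print(cover_and_crop(4000, 3000, 3000, 1920))  # (3000, 2250, (0, 165, 3000, 2085))
```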

The new image needs to be at least the same size as your screenshot. The rules are simple. The area that will be shown on your main monitor goes in the upper-left corner. Areas to the right of the main monitor go directly to the right of it. Areas to the left go at the right edge of the image, extending towards the left. Areas below go directly below the main monitor, while areas above go at the bottom edge of the image, extending upwards.
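Taken together, these rules amount to a wrap-around shift: a pixel at (x, y) in the picture moves to ((x − main_x) mod width, (y − main_y) mod height), where (main_x, main_y) is the main monitor’s upper-left corner in the picture. The toy sketch below uses characters as pixels and assumes the picture has exactly the screenshot’s size and layout. Gimp’s Layer->Transform->Offset dialog, with its wrap-around edge behaviour, may be able to do the same shift in one step. Treat that as an untested suggestion.

```python
def to_tiled_wallpaper(image, main_x, main_y):
    """Rearrange a desktop-layout picture into a Tile-mode wallpaper.

    image is a list of rows laid out like the screenshot; (main_x, main_y) is
    where the main monitor's upper-left corner sits in it. Areas left of /
    above the main monitor wrap around to the right / bottom edge.
    """
    height, width = len(image), len(image[0])
    wallpaper = [[None] * width for _ in range(height)]
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            wallpaper[(y - main_y) % height][(x - main_x) % width] = pixel
    return wallpaper


# 3x2 toy picture; the main monitor's corner is the "e" at (1, 1).
picture = [list("abc"), list("def")]
for row in to_tiled_wallpaper(picture, 1, 1):
    print("".join(row))
# efd
# bca
```

The main monitor’s corner ends up in the upper-left, the column to its left moves to the right edge, and the row above moves to the bottom, matching the rules above.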

The result in my case looks like this:

Conclusion

Windows doesn’t really take multiple monitors into account, and that shows here too. Just making a simple wallpaper like this is a bit of work, and using third-party software is probably the best way for most people.

The catch is that I’m not sure whether the third-party tools can properly take the gap between the monitors into account.

For most monitor setups, though, doing it manually should be a breeze, and even with this kind of setup it is not too bad once you have tried it a couple of times. It would have been much easier if Windows had used the upper-left corner of the combined monitor area instead.

Update:

I thought that there was something looking wrong and there was… My monitors are standing at a slight angle to each other and this is making the perspective slightly off. Seriously, this is starting to become rocket science…

Tags: dual-monitor, Gimp, wallpaper, Windows 7